1. Introduction

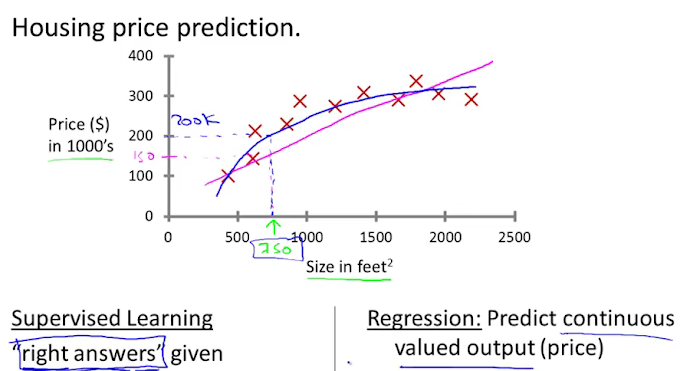

A single Gaussian is limited in the shapes of data it can describe. A mixture is a combination of simpler “component” distributions. With enough components, a Gaussian mixture can approximate virtually any continuous distribution to arbitrary accuracy. Mixtures are used in classification, image segmentation and clustering.

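A Gaussian mixture model with K components has the density:

$$p(\mathbf{x}) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k), \qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1$$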

The goal is to learn the means $\boldsymbol{\mu}_k$, covariances $\boldsymbol{\Sigma}_k$ and priors $\pi_k$ using expectation maximization (EM):

1. Randomly initialize the parameters of the component distributions.

2. Repeat until convergence:

a. E-step: compute the probability of each data point under each component, given the current parameters.

b. M-step: recalculate the parameters based on these estimated probabilities.
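The two steps above can be sketched in NumPy. This is a minimal illustration under several assumptions. Covariances are diagonal. A fixed number of iterations stands in for a convergence test. A small `eps` floor keeps the variances positive. The helper name `fit_gmm_em` is hypothetical. It is not scikit-learn's implementation.

```python
import numpy as np

def fit_gmm_em(X, K, n_iter=100, eps=1e-6, seed=0):
    """Fit a diagonal-covariance GMM to X (shape N x D) with plain EM (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    N, D = X.shape
    # 1. Random initialization: means at K distinct data points, unit variances, uniform priors
    mu = X[rng.choice(N, size=K, replace=False)].astype(float)
    var = np.ones((K, D))
    pi = np.full(K, 1.0 / K)
    # 2. Repeat (assumption: a fixed number of iterations instead of a convergence test)
    for _ in range(n_iter):
        # a. E-step: log(pi_k * N(x_i | mu_k, diag(var_k))) for every point i and component k, shape (N, K)
        diff = X[:, None, :] - mu[None, :, :]
        log_p = (np.log(pi)
                 - 0.5 * np.sum(np.log(2 * np.pi * var), axis=1)
                 - 0.5 * np.sum(diff ** 2 / var[None, :, :], axis=2))
        # Normalize each row with the log-sum-exp trick to get membership probabilities
        m = log_p.max(axis=1, keepdims=True)
        log_norm = m + np.log(np.exp(log_p - m).sum(axis=1, keepdims=True))
        resp = np.exp(log_p - log_norm)
        # b. M-step: re-estimate priors, means and variances from the membership probabilities
        Nk = resp.sum(axis=0) + 1e-12          # effective number of points per component
        pi = Nk / Nk.sum()
        mu = (resp.T @ X) / Nk[:, None]
        diff = X[:, None, :] - mu[None, :, :]
        var = np.einsum("nk,nkd->kd", resp, diff ** 2) / Nk[:, None] + eps
    return pi, mu, var

# Usage: two well-separated synthetic 2-D blobs centred at (-3, -3) and (3, 3)
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-3, 1, size=(200, 2)), rng.normal(3, 1, size=(200, 2))])
pi, mu, var = fit_gmm_em(X, K=2)
print(pi.round(2), mu.round(2))
```

On data like this, the recovered means should land close to the two blob centres.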

We use the multivariate Gaussian density to compute the relative likelihood of each data point under each component:

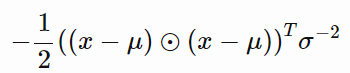

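If each covariance is diagonal, $\boldsymbol{\Sigma}_k = \operatorname{diag}(\boldsymbol{\sigma}_k^2)$, we can simplify:

$$\mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\sigma}_k^2) = \frac{1}{\sqrt{(2\pi)^D \prod_{d=1}^{D} \sigma_{kd}^2}} \exp\!\Big(-\frac{1}{2} \sum_{d=1}^{D} \big[(\mathbf{x}-\boldsymbol{\mu}_k) \odot (\mathbf{x}-\boldsymbol{\mu}_k) \odot \boldsymbol{\sigma}_k^{-2}\big]_d\Big)$$

where ⊙ represents element-wise multiplication, $\boldsymbol{\sigma}_k^{-2}$ is the vector of inverse variances of component k, and D is the dimension of $\mathbf{x}$.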
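With diagonal covariances (which the vector of inverse variances σ⁻² implies), the Gaussian needs only element-wise operations. Here is a minimal sketch of its logarithm, checked against the general multivariate formula. The function names `diag_gaussian_logpdf` and `full_gaussian_logpdf` are hypothetical, and the example values are made up.

```python
import numpy as np

def diag_gaussian_logpdf(x, mu, var):
    """log N(x | mu, diag(var)) using only element-wise operations (hypothetical helper)."""
    diff = x - mu
    inv_var = 1.0 / var                              # sigma^{-2}: the vector of inverse variances
    return (-0.5 * len(x) * np.log(2 * np.pi)
            - 0.5 * np.sum(np.log(var))
            - 0.5 * np.sum(diff * diff * inv_var))   # sum of (x - mu) ⊙ (x - mu) ⊙ sigma^{-2}

def full_gaussian_logpdf(x, mu, cov):
    """General multivariate normal log-density, used here only for comparison."""
    diff = x - mu
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (len(x) * np.log(2 * np.pi) + logdet + diff @ np.linalg.solve(cov, diff))

x = np.array([0.5, -1.0, 2.0])
mu = np.array([0.0, 0.0, 1.0])
var = np.array([1.0, 4.0, 0.25])
print(diag_gaussian_logpdf(x, mu, var), full_gaussian_logpdf(x, mu, np.diag(var)))
```

The two values agree because a diagonal covariance has determinant $\prod_d \sigma_d^2$ and inverse $\operatorname{diag}(\boldsymbol{\sigma}^{-2})$.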

Instead of working with the likelihood function directly, we work with the log-likelihood.

The log-likelihood is the logarithm of the likelihood function. Because the logarithm is strictly increasing, maximizing the likelihood is equivalent to maximizing the log-likelihood. In practice the log-likelihood is more convenient for maximum likelihood estimation. It turns the product of per-point densities into a sum, which is easier to differentiate and avoids numerical underflow when there are many data points.

The log-likelihood of the data set then becomes:

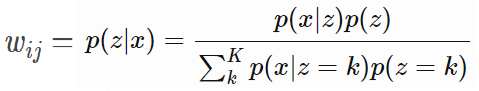
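The following sketch shows why the log form matters numerically. The product of per-point densities underflows to zero, while the log-likelihood stays an ordinary finite number. For a mixture, each point's log-density is the log of a sum. This is commonly computed with the log-sum-exp trick. The 1-D, two-component mixture and its parameters are made up for illustration, and the helper names are hypothetical.

```python
import numpy as np

def log_normal_1d(x, mu, sigma):
    # log N(x | mu, sigma^2) for scalar Gaussians, broadcasting over arrays
    return -0.5 * np.log(2 * np.pi * sigma**2) - 0.5 * ((x - mu) / sigma) ** 2

def mixture_loglik(x, pi, mu, sigma):
    # log p(x_i) = log sum_k pi_k N(x_i | mu_k, sigma_k^2), computed stably with log-sum-exp
    log_terms = np.log(pi)[None, :] + log_normal_1d(x[:, None], mu[None, :], sigma[None, :])
    m = log_terms.max(axis=1, keepdims=True)
    log_px = m[:, 0] + np.log(np.exp(log_terms - m).sum(axis=1))
    return log_px.sum()

rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(-2, 0.5, 1000), rng.normal(3, 1.0, 1000)])
pi, mu, sigma = np.array([0.5, 0.5]), np.array([-2.0, 3.0]), np.array([0.5, 1.0])

# The naive likelihood (a product of 2000 densities) underflows to 0.0 ...
naive = np.prod(np.exp(np.log(pi)[None, :] + log_normal_1d(x[:, None], mu, sigma)).sum(axis=1))
# ... while the log-likelihood is a finite (large negative) number
ll = mixture_loglik(x, pi, mu, sigma)
print(naive, ll)
```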

To update the parameters, we apply Bayes' rule to compute the posterior probability that each data point belongs to each component. These posteriors are called membership weights:

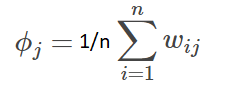

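The probability of each class, ϕ_k (the prior π_k), is then updated as the average membership weight of that class over all N data points: $\phi_k = \frac{1}{N}\sum_{i=1}^{N} w_{ik}$, where $w_{ik}$ is the membership weight of point i in component k.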
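As a sanity check on the Bayes' rule step, we can recompute membership weights from a fitted scikit-learn model's own parameters (`weights_`, `means_`, `covariances_`) using SciPy's `multivariate_normal`. They should agree with `predict_proba`. The data set mirrors the example in the next section. The variable names `membership` and `phi` are just illustrative. This is a sketch, not how scikit-learn computes them internally (it works in log space).

```python
import numpy as np
from scipy.stats import multivariate_normal
from sklearn.datasets import make_blobs
from sklearn.mixture import GaussianMixture

X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.60, random_state=0)
gmm = GaussianMixture(n_components=4, covariance_type="full", random_state=0).fit(X)

# Numerator of Bayes' rule: pi_k * N(x_i | mu_k, Sigma_k) for every point and component, shape (N, K)
weighted = np.column_stack([
    w * multivariate_normal.pdf(X, mean=m, cov=c)
    for w, m, c in zip(gmm.weights_, gmm.means_, gmm.covariances_)
])
# Divide by the evidence p(x_i) = sum_k pi_k N(x_i | mu_k, Sigma_k) to get membership weights
membership = weighted / weighted.sum(axis=1, keepdims=True)

# Updated class probabilities: the average membership weight of each component
phi = membership.mean(axis=0)
print(np.allclose(membership, gmm.predict_proba(X)), phi.round(3))
```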

2.1 Clustering

Weaknesses of k-Means

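- The k-means model has no intrinsic measure of the probability of cluster assignments. Every point is assigned to exactly one cluster, with no indication of how uncertain that assignment is.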

- The k-means model effectively places a circle (a hypersphere in higher dimensions) at the center of each cluster, with a radius set by the most distant point in the cluster, so the cluster models are circular. This makes k-means a poor fit for oblong or elliptical clusters:

Gaussian mixture models come to the rescue.

A simple GMM example:

from sklearn.datasets import make_blobs

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set()

from sklearn.mixture import GaussianMixture

X, y_true = make_blobs(n_samples=300, centers=4, cluster_std=0.60, random_state=0)

gmm = GaussianMixture(n_components=4).fit(X)

labels = gmm.predict(X)

plt.scatter(X[:, 0], X[:, 1], c=labels, s=40, cmap='viridis');

plt.show()

probs = gmm.predict_proba(X)
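`predict_proba` is what k-means lacks: a soft assignment for every point. Here is a short sketch of inspecting it on the same blobs. The 0.9 threshold for calling a point "uncertain" is an arbitrary, hypothetical choice, and `random_state=0` is added only for reproducibility.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.mixture import GaussianMixture

X, y_true = make_blobs(n_samples=300, centers=4, cluster_std=0.60, random_state=0)
gmm = GaussianMixture(n_components=4, random_state=0).fit(X)
probs = gmm.predict_proba(X)

print(probs.shape)                 # (300, 4): one row per point, one column per component
print(probs[:5].round(3))          # soft assignments for the first five points
# predict() returns the most probable component, i.e. the argmax of each row
print((probs.argmax(axis=1) == gmm.predict(X)).all())
# Points whose best component has probability below 0.9 (hypothetical threshold)
uncertain = np.where(probs.max(axis=1) < 0.9)[0]
print(len(uncertain), "uncertain points")
```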

A GMM example for oblong or elliptical clusters:

Let's consider the covariance_type parameter of sklearn.mixture.GaussianMixture:

- covariance_type="spherical" constrains the shape of each cluster so that the variance is the same along all dimensions (a circle in 2-D).

- covariance_type="diag" means that the size of the cluster along each dimension can be set independently (an axis-aligned ellipse).

- covariance_type="full" allows each cluster to be modeled as an ellipse with arbitrary orientation. It is the most computationally expensive option and is scikit-learn's default.
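One way to see what each option stores is to look at the shape of the fitted `covariances_` attribute. With K components and D features, "spherical" keeps one variance per component, "diag" keeps one variance per feature per component, and "full" keeps a complete D × D matrix per component. This is why "full" is the most expensive. The toy data below is an illustrative assumption.

```python
from sklearn.datasets import make_blobs
from sklearn.mixture import GaussianMixture

X, _ = make_blobs(n_samples=500, centers=3, n_features=2, random_state=0)

shapes = {}
for cov_type in ["spherical", "diag", "full"]:
    gmm = GaussianMixture(n_components=3, covariance_type=cov_type, random_state=0).fit(X)
    # spherical: (K,)   diag: (K, D)   full: (K, D, D)
    shapes[cov_type] = gmm.covariances_.shape
    print(cov_type, shapes[cov_type], "BIC =", round(gmm.bic(X), 1))
```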

from sklearn.datasets import make_blobs

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set()

from sklearn.mixture import GaussianMixture

X, y = make_blobs(n_samples=1500, random_state=170)

transformation = [[0.60834549, -0.63667341], [-0.40887718, 0.85253229]]

X_aniso = np.dot(X, transformation)

gmm = GaussianMixture(n_components=3, covariance_type="full").fit(X_aniso)

probs = gmm.predict_proba(X_aniso)

labels = gmm.predict(X_aniso)

plt.scatter(X_aniso[:, 0], X_aniso[:, 1], c=labels, s=40, cmap='viridis');

plt.show()
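To compare this against k-means on the same stretched data, one option is the adjusted Rand index (ARI) against the true blob labels. An ARI of 1.0 is a perfect match and about 0 is chance level. This is a minimal sketch. `n_init=10` and the `random_state` values are illustrative choices, and the exact scores depend on the scikit-learn version and initialization.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import adjusted_rand_score
from sklearn.mixture import GaussianMixture

X, y = make_blobs(n_samples=1500, random_state=170)
transformation = [[0.60834549, -0.63667341], [-0.40887718, 0.85253229]]
X_aniso = np.dot(X, transformation)

km_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X_aniso)
gmm_labels = GaussianMixture(n_components=3, covariance_type="full",
                             random_state=0).fit_predict(X_aniso)

# Agreement with the true blob labels (label permutations do not matter for ARI)
km_ari = adjusted_rand_score(y, km_labels)
gmm_ari = adjusted_rand_score(y, gmm_labels)
print("k-means ARI:", round(km_ari, 3))
print("GMM (full) ARI:", round(gmm_ari, 3))
```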

2.2 GMM as Density Estimation

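A GMM does not have to be used to find separated clusters of data. Because it is fundamentally a density estimator, it can also be used to model the overall distribution of the input data.

Here we model the distribution of the two-moons data set and then draw new samples from the fitted model: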

from sklearn.datasets import make_moons

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set()

from sklearn.mixture import GaussianMixture

X, y = make_moons(n_samples=100, noise=0.1)

gmm = GaussianMixture(n_components=10, covariance_type="full").fit(X)

Xnew = gmm.sample(400)[0]

plt.scatter(Xnew[:, 0], Xnew[:, 1])

plt.show()

With 2 components, the generated samples do not match the original distribution.

With 10 components, the generated samples match the original distribution quite closely.
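The visual comparison can be backed by a number. `score()` returns the average log-likelihood per sample, where higher means a closer fit to the training data. `sample()` returns a `(samples, component_labels)` tuple, which is why the example above takes `[0]`. `score_samples()` evaluates the log-density at arbitrary points. The `random_state` values and the three query points are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.mixture import GaussianMixture

X, _ = make_moons(n_samples=100, noise=0.1, random_state=0)

scores = {}
for k in (2, 10):
    gmm = GaussianMixture(n_components=k, covariance_type="full", random_state=0).fit(X)
    scores[k] = gmm.score(X)       # mean log-likelihood per training sample
    print(k, "components: mean log-likelihood =", round(scores[k], 3))

# gmm is now the 10-component model; sample() gives (points, component index of each point)
Xnew, comp = gmm.sample(400)
print(Xnew.shape, comp.shape)

# Log-density log p(x) at a few query points: two on the moons, one far away at (3, 3)
grid = np.array([[0.0, 0.5], [1.0, 0.0], [3.0, 3.0]])
log_density = gmm.score_samples(grid)
print(log_density.round(2))
```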

How do we choose the number of components?

Note that choosing the number of components this way measures how well the GMM works as a density estimator, not how well it works as a clustering algorithm.

One approach is to use an analytic criterion such as the Akaike information criterion (AIC) or the Bayesian information criterion (BIC).

Any model loses some information when it is used to represent the process that generated the data. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher its quality.

When fitting models, it is possible to increase the likelihood by adding parameters, but doing so may result in overfitting. BIC counteracts this with a penalty on the number of parameters that also grows with the number of samples.
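For reference, here are the standard definitions of both criteria. $\hat{L}$ is the maximized likelihood, N is the number of samples and p is the number of free parameters. For a full-covariance GMM with K components in D dimensions, p counts the priors (which must sum to 1), the means and the symmetric covariance matrices:

$$\mathrm{AIC} = 2p - 2\ln\hat{L}, \qquad \mathrm{BIC} = p\ln N - 2\ln\hat{L}$$

$$p = \underbrace{(K-1)}_{\text{priors}} + \underbrace{KD}_{\text{means}} + \underbrace{K\,\frac{D(D+1)}{2}}_{\text{covariances}}$$

Since $\ln N > 2$ whenever $N \ge 8$, BIC penalizes each extra parameter more heavily than AIC on all but tiny data sets. As a result, it tends to favor fewer components.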

The optimal number of components is the value that minimizes the AIC or the BIC, depending on which criterion we use.

Consider the example above:

BIC recommends 6 components.

AIC recommends 17 components.
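A sketch of how such a sweep might be run with scikit-learn's `bic()` and `aic()` methods, plus a cross-check of `bic()` and `aic()` against the formulas. The range 1 to 20, `random_state=0` and the fixed moons seed are assumptions. Because the data and initialization are random, the chosen numbers need not reproduce 6 and 17 exactly.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.mixture import GaussianMixture

X, _ = make_moons(n_samples=100, noise=0.1, random_state=0)
n_components = np.arange(1, 21)
models = [GaussianMixture(n_components=k, covariance_type="full", random_state=0).fit(X)
          for k in n_components]
bic = np.array([m.bic(X) for m in models])
aic = np.array([m.aic(X) for m in models])
best_bic, best_aic = n_components[bic.argmin()], n_components[aic.argmin()]
print("BIC picks", best_bic, "components; AIC picks", best_aic)

# Cross-check the 6-component model against BIC = p ln N - 2 ln L and AIC = 2p - 2 ln L
m = models[5]
N, D = X.shape
K = m.n_components
p = (K - 1) + K * D + K * D * (D + 1) // 2   # priors + means + full covariances
log_L = m.score(X) * N                       # score() is the mean log-likelihood per sample
print(np.isclose(m.bic(X), p * np.log(N) - 2 * log_L), np.isclose(m.aic(X), 2 * p - 2 * log_L))
```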
